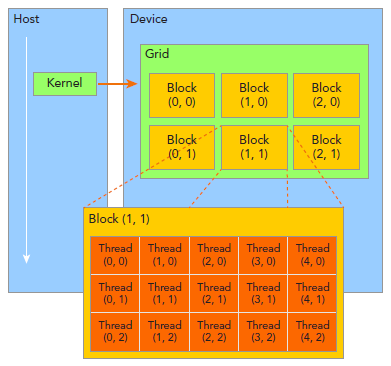

The return value is a NumPy-array-like object. In the CUDA programming model we speak of launching a kernel with a grid of thread blocks. `Dg` (of type `dim3`) specifies the dimension and size of the grid. The first argument in the execution configuration specifies the number of thread blocks in the grid, and the second specifies the number of threads in a thread block.
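The arithmetic behind an execution configuration can be sketched in plain Python. This is only an illustration that runs on the CPU: the helper names `blocks_for` and `global_indices` are hypothetical and not part of CUDA or Numba. It shows how many blocks are needed to cover a given number of elements, and which global index each thread would derive from its block and thread index.

```python
def blocks_for(n_elements, threads_per_block):
    """Smallest number of blocks whose threads cover n_elements (ceiling division)."""
    if n_elements <= 0 or threads_per_block <= 0:
        raise ValueError("both arguments must be positive")
    return (n_elements + threads_per_block - 1) // threads_per_block


def global_indices(blocks_per_grid, threads_per_block):
    """List the 1D global index of every thread in the launch.

    Mirrors the usual kernel expression
    blockIdx.x * blockDim.x + threadIdx.x.
    """
    return [
        block * threads_per_block + thread
        for block in range(blocks_per_grid)
        for thread in range(threads_per_block)
    ]


if __name__ == "__main__":
    n = 1000
    tpb = 256
    bpg = blocks_for(n, tpb)
    print(f"{n} elements with {tpb} threads per block need {bpg} blocks")
    # The last block is only partly used, so a kernel would guard with `if i < n`.
    print("threads launched:", bpg * tpb)
```

Because the block count is rounded up, a launch usually starts more threads than there are elements, which is why kernels typically check their global index against the array length.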

DIM3 GRID CUDA SOFTWARE

In CUDA there is a hierarchy of threads in software which mimics how thread processors are grouped on the GPU.

The hands-on exercise on porting a matrix multiplier to the GPU defines the macro `#define pos2d(Y, X, W) ((Y) * (W) + (X))`, the constants `const unsigned int BPG = 50;`, `const unsigned int TPB = 32;` and `const unsigned int N = BPG * TPB;`, and the kernel `__global__ void cuMatrixMul(const float *A, const float *B, float *C)`.

Write-combined memory is faster to write by the host and to read by the device, but slower to read by the host and slower to write by the device.
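To make the role of `pos2d` and of the constants `BPG`, `TPB` and `N` concrete, here is a minimal CPU-only sketch in Python. It is not the exercise's kernel: `matmul_flat` is a hypothetical helper that does sequentially what each GPU thread would do for one output element, using the same row-major index formula as the macro.

```python
BPG = 50       # blocks per grid, as in the example
TPB = 32       # threads per block, as in the example
N = BPG * TPB  # matrix width covered by one grid dimension


def pos2d(y, x, w):
    """Row-major offset of element (y, x) in a matrix of width w."""
    return y * w + x


def matmul_flat(a, b, n):
    """Multiply two n x n matrices stored as flat row-major lists.

    Each (y, x) pair corresponds to the work of one GPU thread.
    """
    c = [0.0] * (n * n)
    for y in range(n):
        for x in range(n):
            acc = 0.0
            for k in range(n):
                acc += a[pos2d(y, k, n)] * b[pos2d(k, x, n)]
            c[pos2d(y, x, n)] = acc
    return c


if __name__ == "__main__":
    print("N =", N)
    print(matmul_flat([1, 2, 3, 4], [5, 6, 7, 8], 2))
```

On the GPU the two outer loops disappear: each thread derives its own `y` and `x` from its block and thread indices and runs only the inner loop over `k`.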

DIM3 GRID CUDA PORTABLE

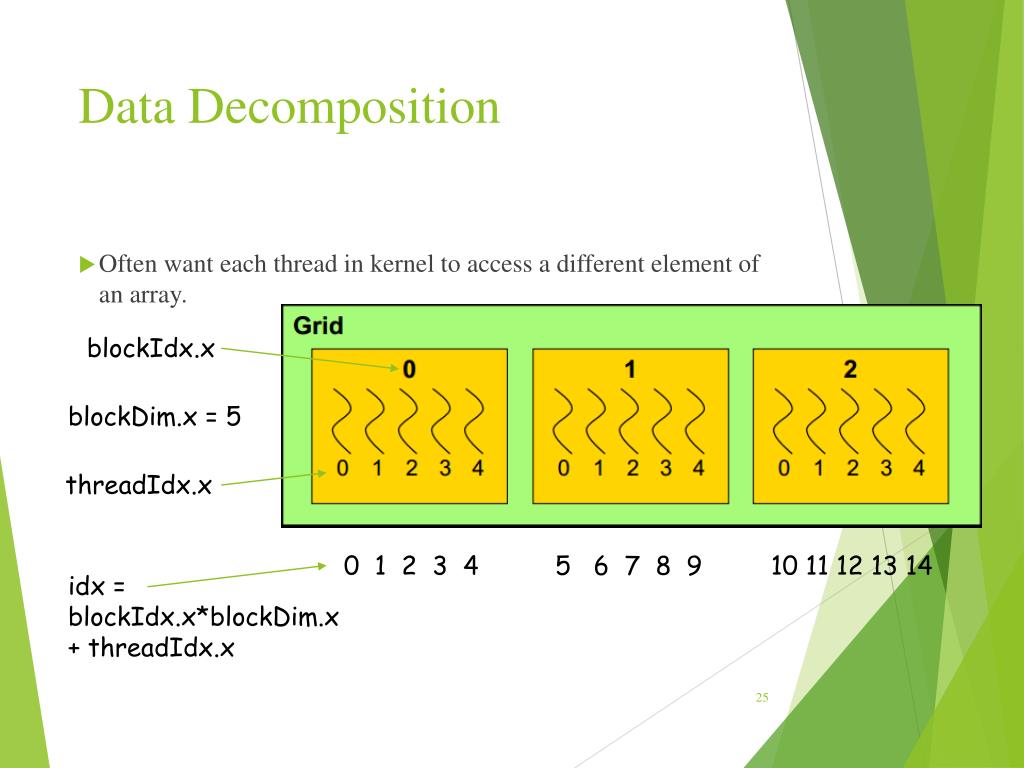

The following are special DeviceNDArray factories:

`numba.cuda.device_array(shape, dtype=np.float, strides=None, order='C', stream=0)`: Allocate an empty device ndarray.

`numba.cuda.pinned_array(shape, dtype=np.float, strides=None, order='C')`: Allocate a numpy.ndarray with a buffer that is pinned (pagelocked).

`numba.cuda.mapped_array(shape, dtype=np.float, strides=None, order='C', stream=0, portable=False, wc=False)`: Allocate a mapped ndarray with a buffer that is pinned and mapped on to the device. `portable` is a boolean flag to allow the allocated device memory to be usable in multiple devices.

`DeviceNDArray.copy_to_host(ary=None, stream=0)`: Copy self to `ary` or create a new numpy ndarray, for example `copy_to_host(stream=stream)`.

CUDA block/grid dimensions: when to use `dim3`? The way you arrange the data in memory is independent of how you configure the threads of your kernel. The memory is always a 1D continuous space of bytes. A grid can contain up to 3 dimensions of blocks, and a block can contain up to 3 dimensions of threads. A grid can have 1 to 65535 blocks, and the number of threads in a block is limited by the device.

Follow-up question: why is a 3D grid `dim3(x, y, z)` almost never used? I can't find any good documentation, examples, Stack Overflow posts, or blog posts that use CUDA this way. Just because a language feature exists doesn't mean that programmers find it particularly useful or that it is the best fit for common use cases.

Batches of 8-bit fixed-point samples are input to the DSP pipeline from an A/D converter. Each sample consists of 1024 data points. For more efficient processing, we group samples into batches of 1000 samples each.

Figure 1: The processing pipeline for our example before and with CUDA 6.5 callbacks.
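The point that memory layout and thread configuration are independent can be illustrated with the batch sizes mentioned above (1000 samples of 1024 data points each). The following is a CPU-only Python sketch under stated assumptions: the 32 x 8 block shape and the helper names `linear_offset`, `grid_2d` and `thread_to_element` are hypothetical choices for illustration, not taken from the source.

```python
SAMPLES_PER_BATCH = 1000  # from the text
POINTS_PER_SAMPLE = 1024  # from the text


def linear_offset(sample, point, points_per_sample=POINTS_PER_SAMPLE):
    """Offset of one data point in the flat 1D buffer (row-major layout)."""
    return sample * points_per_sample + point


def grid_2d(width, height, block=(32, 8)):
    """Number of blocks in x and y needed to cover width x height elements."""
    bx, by = block
    return ((width + bx - 1) // bx, (height + by - 1) // by)


def thread_to_element(block_idx, thread_idx, block_dim):
    """Map a 2D (block, thread) index pair to (sample, point).

    Mirrors blockIdx * blockDim + threadIdx in each dimension.
    """
    x = block_idx[0] * block_dim[0] + thread_idx[0]
    y = block_idx[1] * block_dim[1] + thread_idx[1]
    return y, x


if __name__ == "__main__":
    grid = grid_2d(POINTS_PER_SAMPLE, SAMPLES_PER_BATCH)
    print("2D grid of blocks:", grid)
    sample, point = thread_to_element((1, 2), (3, 4), (32, 8))
    print("thread handles sample", sample, "point", point,
          "at byte offset", linear_offset(sample, point))
```

A 1D launch over all 1,024,000 elements would reach exactly the same offsets. The 2D shape only changes how each thread computes its index, not where the data lives.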
